Terraform templates to run a GCP Cloud Function periodically with Cloud Scheduler

I decided to start using Terraform as my infrastructure-as-code solution, and to take my first steps with it I created a small project that helps lower the costs and CO2 emissions of cloud usage.

The first tool of this project is a small application that starts and stops a Cloud SQL instance on Google Cloud at specific times. For example, in a development environment it can start the database at 8 am and stop it at 7 pm, when the working day is over.

The complete code of the project is available on GitHub under the folder ‘cloud-sql-optimizer’.

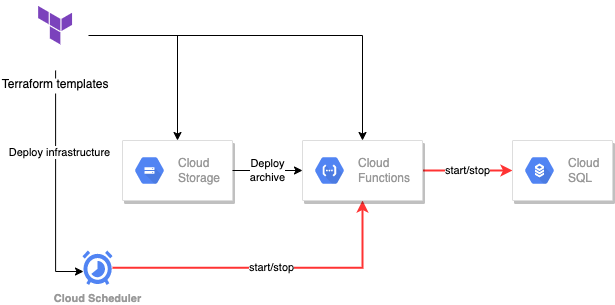

Architecture

Below is the high-level architecture of the application. The black arrows represent the deployment of the cloud services, and the red ones represent the interactions between the services.

The Terraform templates create all the resources except the Cloud SQL database, which must already exist.

The components are:

- Cloud Storage: used to store the archive with the function code

- Cloud Scheduler: the service makes it easy to create a cron job. The templates create two schedulers: one to start the database and one to stop it.

- Cloud Function: contains the Python code that starts and stops the database

Requirements

This is what is needed to deploy and run the Cloud SQL Optimizer:

- A GCP project

- A service account with the Project Editor and Cloud Functions Admin roles (the former to create the resources, the latter to grant the invoker role to the service account that will be allowed to invoke the function)

- The JSON credentials file for the above service account

- A Cloud SQL instance in the GCP project

- The Cloud Functions, Cloud Scheduler, Cloud SQL Admin and Cloud Storage APIs enabled

The Python function code

I mainly reused, with slight modifications, the code provided at https://aus800.com.au/use-cloud-function-to-start-and-stop-cloud-sql/

The function expects an HTTP POST request with a JSON body with the following structure:

    {
      "action": <start or stop>,
      "db_instance_name": <name of the db instance>
    }

For example:

    {
      "action": "stop",
      "db_instance_name": "test1"
    }

The function uses the Google APIs. The core of the code is the function “patch”, which patches the DB instance with the appropriate activationPolicy: ALWAYS (to run the instance) or NEVER (to stop it).
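The core logic described above can be sketched as follows. This is a minimal sketch, not the author's code. The helper names `parse_request`, `build_patch_body` and `patch_instance` are hypothetical. The Cloud SQL Admin client is passed in as `service`, assumed to be built with `googleapiclient.discovery.build("sqladmin", "v1beta4")`, so the logic can be read and exercised without GCP credentials.

```python
import json
from typing import Any, Dict, Tuple

# Map the "action" field of the request to a Cloud SQL activation policy.
ACTIVATION_POLICIES = {"start": "ALWAYS", "stop": "NEVER"}


def build_patch_body(action: str) -> Dict[str, Any]:
    """Build a partial DatabaseInstance resource that only sets the activation policy."""
    if action not in ACTIVATION_POLICIES:
        raise ValueError(f"unsupported action: {action!r}")
    return {"settings": {"activationPolicy": ACTIVATION_POLICIES[action]}}


def parse_request(raw_body: str) -> Tuple[str, str]:
    """Validate the JSON body and return (action, db_instance_name). Hypothetical helper."""
    payload = json.loads(raw_body)
    action = payload.get("action")
    instance = payload.get("db_instance_name")
    build_patch_body(action)  # raises ValueError for anything other than start/stop
    if not instance:
        raise ValueError("db_instance_name is required")
    return action, instance


def patch_instance(service: Any, project_id: str, instance: str, action: str) -> Any:
    """Call instances.patch on the Cloud SQL Admin API.

    `service` is assumed to be a discovery client for sqladmin v1beta4.
    """
    request = service.instances().patch(
        project=project_id, instance=instance, body=build_patch_body(action)
    )
    return request.execute()
```

With the example body above, `parse_request` returns `("stop", "test1")`, and the patch body becomes `{"settings": {"activationPolicy": "NEVER"}}`. Because `instances.patch` has patch semantics, sending only this field should leave the rest of the instance configuration unchanged.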

The function get_project_id retrieves the ID of the project where both the function and the Cloud SQL instance are deployed.

The complete code is available in the ‘cloud-sql-optimizer’ folder of the GitHub repository.
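The author's `get_project_id` is not reproduced here. The sketch below shows one common way to obtain the project ID from inside a Cloud Function. It first checks environment variables; as an assumption, `GCP_PROJECT` is only set automatically by older runtimes such as Python 3.7. It then falls back to the GCP metadata server. The fallback order and the timeout are illustrative choices.

```python
import os
import urllib.request
from typing import Optional

# Metadata server endpoint that returns the current project ID (requires the Metadata-Flavor header).
METADATA_URL = "http://metadata.google.internal/computeMetadata/v1/project/project-id"


def get_project_id(timeout: float = 2.0) -> Optional[str]:
    # 1. Environment variables: GCP_PROJECT (older runtimes) or a manually set GOOGLE_CLOUD_PROJECT.
    for var in ("GCP_PROJECT", "GOOGLE_CLOUD_PROJECT"):
        value = os.environ.get(var)
        if value:
            return value
    # 2. Metadata server, reachable from inside Cloud Functions and other GCP compute services.
    request = urllib.request.Request(METADATA_URL, headers={"Metadata-Flavor": "Google"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.read().decode("utf-8").strip()
    except OSError:
        # Not running on GCP, or the metadata server is unreachable.
        return None
```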

The required libraries are:

- google-api-python-client: the client library for Google’s discovery-based APIs, used to interact with the Cloud SQL Admin API

- google-auth-httplib2, google-auth and oauth2client: used to authenticate requests to the Google APIs

This is the content of requirements.txt:

    google-api-python-client==1.10.0
    google-auth-httplib2==0.0.4
    google-auth==1.19.2
    oauth2client==4.1.3

The infrastructure templates

The Terraform templates are in the ‘terraform’ folder. I relied heavily on the great examples from https://diarmuid.ie/blog/setting-up-a-recurring-google-cloud-function-with-terraform

The variables file template, variables_template.tf, is where the configuration values go (the file can then be renamed to variables.tf):

    variable "project_id" {
      default = ""
    }

    variable "region" {
      default = ""
    }

    variable "zone" {
      default = ""
    }

    variable "credentials" {
      default = "../../gcp.json"
    }

    variable "db_instance_name" {
      default = ""
    }

- project_id: ID of the project where you want to create the infrastructure

- region: the region (e.g. europe-west1)

- zone: the zone inside the region (e.g. europe-west1-a)

- credentials: the path to the GCP credentials file (e.g. ../../gcp.json)

- db_instance_name: the name of the instance to start or stop (e.g. test1)
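Editing the defaults is not the only option. Terraform also loads a file named `terraform.tfvars.json` from the working directory automatically, and its values override the `default` values declared above. The sketch below writes such a file after two basic checks: the values are non-empty, and the zone belongs to the region. The helper `write_tfvars` is hypothetical and not part of the project.

```python
import json
from pathlib import Path
from typing import Dict

# The five variables declared in variables_template.tf.
REQUIRED = ("project_id", "region", "zone", "credentials", "db_instance_name")


def write_tfvars(values: Dict[str, str], directory: str = ".") -> Path:
    """Validate the variables and write them to terraform.tfvars.json (hypothetical helper)."""
    missing = [name for name in REQUIRED if not values.get(name)]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    # A zone name is its region name plus a suffix, e.g. europe-west1 -> europe-west1-a.
    if not values["zone"].startswith(values["region"] + "-"):
        raise ValueError(f"zone {values['zone']!r} is not in region {values['region']!r}")
    path = Path(directory) / "terraform.tfvars.json"
    path.write_text(json.dumps({name: values[name] for name in REQUIRED}, indent=2) + "\n")
    return path
```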

The backend.tf file specifies that the Terraform state files will be stored locally:

    terraform {
      backend "local" {}
    }

The storage.tf template creates the bucket where the archive containing the function code is stored:

    resource "google_storage_bucket" "function_bucket" {
      name     = "${var.project_id}-function"
      location = var.region
    }

The function.tf template creates the function and deploys its code from the bucket created above. It also defines the service account (‘cloud-function-invoker’) that is allowed to invoke the function.
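The archive uploaded to the bucket is a zip file of the function source. For the Python runtime, Cloud Functions expects `main.py` (and `requirements.txt`) at the root of that archive. In Terraform this is commonly done with the `archive_file` data source and a `google_storage_bucket_object`. As a hedged illustration of what the archive must contain, the Python sketch below builds an equivalent zip. The function name and paths are hypothetical.

```python
import zipfile
from pathlib import Path
from typing import List


def build_function_archive(source_dir: str, archive_path: str) -> List[str]:
    """Zip the files in source_dir with paths relative to it, so main.py sits at the archive root."""
    source = Path(source_dir)
    if not (source / "main.py").is_file():
        raise FileNotFoundError("the Python runtime expects main.py at the source root")
    target = Path(archive_path).resolve()
    # Collect the files before opening the zip so the archive never includes itself.
    files = sorted(
        p for p in source.rglob("*")
        if p.is_file() and "__pycache__" not in p.parts and p.resolve() != target
    )
    names = []
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in files:
            arcname = path.relative_to(source).as_posix()
            archive.write(path, arcname)
            names.append(arcname)
    return names
```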

Finally, cloudtask.tf defines the two schedulers.

The start_db_1 job starts the instance by sending the appropriate body to the function URL exposed by the previous template (function.tf). The stop_db_1 job stops it three minutes later. Both jobs send an OIDC token for the service account allowed to invoke the function.

In the example, the database is started every day at 23:59 and stopped at 00:02. The schedules can be adapted as needed.
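To make the two jobs concrete, the sketch below builds their definitions in the shape of the Cloud Scheduler REST `Job` resource. Each definition holds a unix-cron schedule, an HTTP POST target, a base64-encoded JSON body and an OIDC token. The function URL and service account email are hypothetical placeholders, and this is an illustration of what the Terraform jobs configure, not the contents of cloudtask.tf.

```python
import base64
import json
from typing import Any, Dict


def scheduler_job(schedule: str, action: str, db_instance_name: str, function_url: str,
                  invoker_email: str, time_zone: str = "Etc/UTC") -> Dict[str, Any]:
    """Build a Cloud Scheduler job definition (REST API shape) that POSTs to the function."""
    payload = {"action": action, "db_instance_name": db_instance_name}
    return {
        "schedule": schedule,  # unix-cron: minute hour day-of-month month day-of-week
        "timeZone": time_zone,
        "httpTarget": {
            "uri": function_url,
            "httpMethod": "POST",
            "headers": {"Content-Type": "application/json"},
            # The REST API carries the request body as base64-encoded bytes.
            "body": base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii"),
            # Scheduler attaches an OIDC ID token for this service account to each request.
            "oidcToken": {"serviceAccountEmail": invoker_email, "audience": function_url},
        },
    }


def build_jobs(function_url: str, invoker_email: str, db_instance_name: str) -> Dict[str, Dict[str, Any]]:
    """Start at 23:59 and stop at 00:02 every day, as in the example above."""
    return {
        "start_db_1": scheduler_job("59 23 * * *", "start", db_instance_name, function_url, invoker_email),
        "stop_db_1": scheduler_job("2 0 * * *", "stop", db_instance_name, function_url, invoker_email),
    }


# Hypothetical placeholders: replace with the real function URL and invoker service account.
jobs = build_jobs(
    "https://REGION-PROJECT_ID.cloudfunctions.net/FUNCTION_NAME",
    "cloud-function-invoker@PROJECT_ID.iam.gserviceaccount.com",
    "test1",
)
```

For the development scenario mentioned at the beginning, the schedules would be `"0 8 * * 1-5"` (start at 8 am, Monday to Friday) and `"0 19 * * 1-5"` (stop at 7 pm), with `time_zone` set to the team's local zone.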

The whole application can be created by running the following commands inside the terraform folder:

    terraform init
    terraform apply

To clean up the resources, run the following command in the same folder:

    terraform destroy

Additional resources

A very good resource for learning more about Terraform templates, including use cases and examples, is https://spacelift.io/blog/terraform-templates

Conclusion

Terraform is a powerful framework for creating dynamic infrastructure-as-code templates, such as the ones used here to deploy this simple architecture. The project can be extended to deploy several schedulers that manage many databases at different times.

The next step will be to create other tools for optimizing cost and energy consumption, and to extend them to Azure and AWS.

Please report any missing or incorrect information in the article so it can be corrected promptly.